/ 73 min read

This is my take on ARC 2025, a review of the literature, some opinions and attempts to categorize and conceptualize the various approaches so far, ideas that have been discussed, as well as what's special about the competition, what might work and what won't work.

If you are new to ARC and want an overview of the various approaches from 2024, I hope this is useful to you as well as me! The first half of the document covers high level approaches and key ideas extracted from various papers and results. The second half provides some more detail (although still fairly high level) on each of the papers that won in ARC 2024, as well as some hand-picked related papers, work and ideas.

Quite a lot of broad knowledge around ML/AI is assumed throughout. I have done my best to link to or explain some concepts, but this will probably be tricky if you don't know some basics around deep learning, and general ML terminology.

I have almost certainly made many mistakes, possibly at a conceptual level, and most definitely in some of my attempts to extract details and ideas out of the papers. There are a few"hot takes" in here too and I've not held back in expressing some opinions of my own - please take these with a pinch of salt, and I'm very open to feedback here.

I have not covered all the literature and read all the papers, there is a lot out there. If you think I've missed something important, please let me know.

An introduction to ARC #

ARC stands for the Abstraction and Reasoning Corpus, it is a benchmark that came out of François Chollet's paper On The Measure of Intelligence in 2019. It has evolved a few times, the benchmark renamed ARC-AGI, and recently an ARC-AGI-2 version was launched in March 2025.

Here is an example of an ARC puzzle. For each puzzle (or sometimes referred to as a task), you are provided with around 2-4 (usually 3) examples of input output grid pairs, and one (but occasionally 2)"test" input grid(s) for which you have to predict the output grid.

The goal is to infer the rule from the examples, and apply it to the test input grids. The puzzles always exist in this same grid world construct, but the space of possible puzzles is massive. The final ARC competition is run against a hidden test set of puzzles, which means a good solution must be able to do some amount of out of domain generalization.

At a high level ARC is designed to test"skill acquisition efficiency", and this would be the simplest way to capture what François defines intelligence as.

The intelligence of a system is a measure of its skill-acquisition efficiency over a scope of tasks, with respect to priors, experience, and generalization difficulty.

You can read a lot more about the details of the benchmark, the competition and explore the puzzles on the ARC website.

As I will reference these throughout, it's important to note that with both the 2024 and 2025 competitions, there are a few different datasets we care about:

training - fully public, 1000 tasks for v2, should contain puzzles that capture all the essential"core knowledge priors" and submissions are expected (but not required) to train on.

- fully public, 1000 tasks for v2, should contain puzzles that capture all the essential"core knowledge priors" and submissions are expected (but not required) to train on. public eval - this is also a publicly available set of 120 tasks, expected to be used to evaluate and iterate on solutions locally, it could also be used as training data for private eval or test submissions.

- this is also a publicly available set of 120 tasks, expected to be used to evaluate and iterate on solutions locally, it could also be used as training data for private eval or test submissions. semi-private eval - not publicly available, 120 tasks, this will be used for leaderboard standings throughout the competition. It is semi-private as some systems have been tested against it that are not sandboxed (e.g service provided LLMs) and there is a possibility of data leakage.

- not publicly available, 120 tasks, this will be used for leaderboard standings throughout the competition. It is semi-private as some systems have been tested against it that are not sandboxed (e.g service provided LLMs) and there is a possibility of data leakage. private eval (test) - this is fully hidden, solutions will only run against it once at the end of the competition for final scoring, leakage is highly unlikely.

What makes ARC special #

Out of domain generalization

When it comes to the private test set your solution program will be presented with new puzzles that it just hasn't seen before. This is a significant difference from many benchmarks, great ingenuity and creativity went into constructing these puzzles and while they may be composed of common elements, transformations, core knowledge priors that are present in the training set, they are ultimately different problems.

People seem to often be surprised by this and just how different the test problems are! Solutions that could easily overfit to the training set could end up scoring 0% on the hidden test set.

This is, I believe, the most important thing to understand about ARC. The challenge here is to generalize out of the domain of the training dataset in a way that most Deep Learning often fails to.

The outcome of this is that some form of test time adaptation is absolutely crucial for getting a good score on the benchmark, and we'll see this as a major theme in the results from 2024.

Compute efficiency

The second most important thing to understand about the challenge comes back to Francois' definition of intelligence -"The intelligence of a system is a measure of its skill-acquisition efficiency over a scope of tasks...".

Efficiency is an explicit part of the challenge. There are deliberate compute bounds for submissions, effectively in terms of FLOPs. You have a machine with a fixed specification and a capped running time. You must use this compute efficiently to succeed. Unrestricted scaling is not what the benchmark is for.

And as with the above, this is the point! It's easy to critique this and say, well if we got GPT4.5 or O3 and threw $1M of compute at it we could solve ARC (kind of like how O3-high basically did that for ARC-AGI-1 in December 2024). But, that is not very efficient [ 0 ] skill acquisition, and we already know in principle that we can get logarithmic returns on inference time compute.

Without efficiency as an explicit goal, ARC would simply not be an interesting benchmark.

A baseline learning architecture for ARC #

Given the context on ARC, it is hopefully clear why you can't"just" throw any pre-trained model using a standard deep learning architecture at it. People have tried, and it doesn't work well (with a carve out for thinking models, discussed below).

At the other end of the spectrum we have a whole category of approaches that come from the world of discrete program search (also referred to as program synthesis, and sometimes program induction). One of the nice things about coming at ARC from this angle is that we can lay out a (horribly inefficient) architecture of a learning system that should solve ARC with enough compute. This gives me an opportunity to define some useful terminology for the rest of the post so let's lay it out!

Let's start by making the very reasonable assumption that it is always possible to write some program that can capture the rule of any given ARC puzzle, and produce the output grid from the input grid, more formally:

There exists a Turing-complete language L , that can represent all possible programs P that map from some representation of a grid X to grid Y .

This must be true if we accept things like the principle of computational equivalence or the notion of Turing-completeness. An obvious (but perhaps not the best) candidate for such a language would be a modern turing-complete programming language such Python.

The second thing we need to do is be able to enumerate all possible programs P that are formed out of our language L . This is generally possible, even for Python there is nothing stopping us from just listing sequences of bytes as the program source, even if most such programs will immediately fail.

For each puzzle we then:

Find the shortest program within P that solves all the example grid pairs.

Given the above, we know that such a program must exist.

Despite the set of programs P being of infinite size, as we are looking for the shortest such program then provided we can enumerate our programs in at least roughly size order, that is not a problem.

Why the shortest? This comes from a few well known theoretical ideas, e.g the minimum-description length principle, Solomonoff induction, Occam's razor, Kolmogorov complexity, etc. I won't write about this in depth here, you can read about them, but in general we are now also accepting the idea that"the simplest explanation is the best explanation".

This relatively dumb approach will probably work provided a few details are right. The problem is it's horribly inefficient, and we don't have infinite compute. So a significant amount of research is about making this approach more efficient, and there are a whole bunch of ways you could do this:

Creating domain-specific languages (DSLs) for ARC that are more efficient to search and enumerate than a fully general purpose language such as Python, which you will see come up in nearly every paper below, even the LLM based ones

(DSLs) for ARC that are more efficient to search and enumerate than a fully general purpose language such as Python, which you will see come up in nearly every paper below, even the LLM based ones Implementing program enumeration and validation as compute efficiently as possible, perhaps even running on GPUs, or some nicely multi-threaded C code

as possible, perhaps even running on GPUs, or some nicely multi-threaded C code Searching intelligently - within a language, we can get smart about which parts of the program space to search, and this can leverage deep learning, manual heuristics, or building up vocabularies of composable programs, there are many approaches here explored below.

- within a language, we can get smart about which parts of the program space to search, and this can leverage deep learning, manual heuristics, or building up vocabularies of composable programs, there are many approaches here explored below. Optimizing selection criteria - shortest program length might oversimplify a little or have slightly varying definitions, we can also see how our programs perform under multiple augmentations of the data, or even combine multiple discovered correct programs.

Nearly all the top papers and results go after some of the above opportunities, and this is broadly what Francois has been saying is the approach he thinks is most likely to deliver results on ARC - Deep learning guided program synthesis. This is also effectively what he and Mike Knoop started a company to explicitly work on:

(w.r.t NDEA) Our first focus is on deep learning-guided program synthesis to create AGI that can invent, adapt, and innovate

Key ideas and the technical report #

At the end of the 2024 competition the ARC team released a technical report covering top scores, top papers, companies and a summary of approaches. It's relatively brief, but I'll highlight a few things from it, or you can read it: ARC Prize 2024: Technical Report.

The 2024 ARC competition saw a shift away from the dominance of discrete program search approaches that had prevailed in previous years. This year, LLMs became a significant factor, driving both new program induction approaches and transductive approaches, with the crucial introduction of test-time fine tuning. Additionally, ensembling played a key role in improving scores for the top papers.

If we look back at the 2020 competition, the winning result that stood for quite a long time called Icecuber was a fairly dumb brute force discrete program search. LLMs out of the box performed poorly, but, with a slew of test time adaption methods being developed for them they quickly started to take over.

One of the standout claims for me from the report was:

One approach that has not been tried so far (likely because it is technically challenging) but that we expect to perform well in the future, is the use of specialist deep learning models to guide the branching decisions of a discrete program search process - similar to what can be seen in the AlphaProof system from Google DeepMind.

I.e no one has tried exactly what Francois thinks will work. There are certainly many papers that attempt to do something like this conceptually, but are not implemented literally as a specialist DL guided program search (for example, the DreamCoder based approaches).

Smart vs efficient search #

One more highlight from the report:

We also note that deep learning-guided program synthesis does not currently decisively beat DSL-based brute-force program search

You have a spectrum here - search smartly - search fast. No one has come up with a DL model that can effectively outperform a well engineered brute-force search, yet. Your NN and search policy network has to deliver more value than its relatively high computational cost.

There is a good analogy here to progress in Chess/Go. For example in chess, Stockfish has been the most consistently capable chess bot over the last 10 years but used no neural networks or learned search policy, just some heuristics and a very efficient and well engineered search algorithm.

It wasn't until 2020 when that trade-off started to make sense for Stockfish and they moved to some sort of NN based policy. We saw a similar concept behind Go. Heuristic based brute force search was no match for humans until AlphaGo, where the breakthrough was ultimately having deep learning guide the search much more intelligently (and a lot of innovations to actually achieve that).

It seems [ 1 ] that humans search smartly, and probably most people assume that this will eventually be the winning approach for ARC that can"break" the logarithmic returns of a brute-force search, but within discrete program search approaches we just haven't seen this flip that way, yet.

We expect that more efficient program search techniques leveraging deep learning should be able to pull away from brute-force search in the future.

Transduction and induction #

Transduction and Induction are two phrases you will see a lot in the literature and in the rest of this write up, let's discuss them a little.

I've struggled a little with this terminology, and the formal definitions of transductive and inductive learning don't seem to help much. The abstract of the 1st prize paper below captures well what they tend to mean in the context of ARC:

Induction - inferring latent functions

- inferring latent functions Transduction - directly predicting the test output for a given test input

Inductive approaches generally mean finding (learning, inferring, inducing) a function that can solve the puzzle. You try to find a good program, then you apply it to the test input (more along the lines of discrete program search). It offers an explanation for the observed data in the form of a program.

Transductive approaches skip that step, they just go for it, generate the output based on some prior knowledge (more along the lines of just giving an LLM the examples and hoping it does some in-context learning). They do not offer an explanation [ 2 ].

I don't think this is a well defined distinction and gets particularly blurry when looking at some of the TTT (test-time-training) fine tuning approaches, and also with thinking language models. It also depends on where you draw the line around a system.

For the TTT case, if you think of an LLM and its weights as a program [ 3 ] - and then at test time you fine-tune your weights to better reproduce the example problems - you are clearly doing some sort of program inference, it's just not on a discrete program. Something to this effect is noted in the technical report:

Interestingly, TTT can be seen as conceptually similar to program search, albeit at the opposite end of the memorization/recombination spectrum.

One difference here however, is that a fine-tuned model offers no easily interpretable explanation.

One of the results called out in the technical report is the value of ensembling both transductive and inductive methods, which was the main point of one of the papers below. Ensembling across both methods was crucial to get to the top of the leaderboard in most cases [ 4 ].

Ensembles are a mainstay of ML, and frequently pop up in winning kaggle competitions. If you want a good score, this is a relatively cheap way to boost it. Notably, 2 of the leaderboard results in 2024 were ensembles of previous years submissions.

Some of the papers discussed below make some interesting observations about what ensemble well together (e.g a mix of transductive and inductive learners), but the mechanics of ensembling are relatively simple so there isn't a tremendous amount to dig into here.

Improvements in DSLs was a significant driver of progress early on in the first few years of ARC being released. Michael Hodel authored ARC-DSL, one of the original DSLs for ARC, as an example that is still relevant and useful today.

A good DSL can greatly improve search efficiency, given it is what we search over. Pure Python is not a great language for brute-force search as most programs are completely irrelevant[ 5 ]. A good DSL for the domain can help ensure that solutions can be represented in a small program, and that most parts of the search space are actually valid programs that operate on grids.

One challenge with most of the DSLs is that they are hand-coded. The author of a DSL might go and solve all the ARC training tasks, and then produce a set of language primitives that are necessary to solve all the problems they have seen. Whilst this makes the search efficient, it's hard to guarantee they are complete. The ability to solve all the training tasks does not necessarily mean they have the ability to solve all of the test time tasks! This needs to be a primary objective of any DSL, be expressive enough whilst also providing a small yet useful set of primitives.

The DSLs often end up being rather large, for example, ARC-DSL contains a massive 160 primitive functions!

If you want to learn more about the construction of a DSL, then the ARC-DSL write-up is probably a good place to start. Probably avoid writing one yourself unless you are doing something very novel, although a number of the papers below do end up doing this.

It is almost certainly the case that there is some transformation of the grid representation of ARC problems that makes them easier to solve than working on the grids directly themselves. Whether this is hard-coded, learned or otherwise, such a representation likely exists.

Parsing ARC grids into objects has been explored in some of the papers, e.g:

Representations for the ARC grids have been explored all the way back in 2022. The intuition here is probably that by mapping to some better data structure for representing the grids, the DSL becomes simpler, and the search process more efficient for programs that operate on those grids.

┌──────────┐ ┌──────────┐ │ Input │ │ Output │ │ Grid │ │ Grid │ └────┬─────┘ └────▲─────┘ │ │ ▼ │ Encoder Decoder │ ▲ │ │ ┌────▼─────┐ ┌────┴─────┐ │ Abstract ├───────────► Abstract │ │ Rep │ │ Rep │ └──────────┘ Solve └──────────┘ this problem!

Representations can be related to the DSL itself but don't have to be. When treated as an explicit outer process, this approach is rather flexible, as these representations can then be fed into many different systems including LLMs, where for example one person claimed a doubling of O1 performance by mapping ARC grids into an abstract, object centric representation using a set of hand crafted heuristics.

Some of the work around these explicit abstract representations has been heuristic or hard coded, but sometimes compact representations of the grids into some other representation is actually an explicit part of the learning objective.

For some of the other approaches, while there may not be an explicit abstraction step, it's reasonable to assume that the layers of a neural net in say a fine-tuned LLM is actually learning such representations implicitly.

The idea of refinement comes up in a few of the papers. If we think of ARC in general as a grid > grid transformation problem, then one obvious thing we can do to break this down a bit is to try to construct the set of repeated transformations through partial grids:

┌─────────┐ │ Input │ └────┬────┘ ├──────────────────────┬──────────────────────┐ │ │ │ ▼ ▼ ▼ ┌─────┐ ┌─────┐ ┌─────┐ │ │ ┌─────────┐ │ │ ┌─────────┐ │ │ null ──►│ f() ├──►│ Partial ├─►│ f() ├──►│ Partial ├─►│ f() ├──► │ │ │ output │ │ │ │ output │ │ │ └─────┘ └─────────┘ └─────┘ └─────────┘ └─────┘

This can come in a few different forms for different approaches. For example with a LLM/VLM, we might repeatedly apply the LLM, providing partial grids as additional context after step 0, maybe even explicitly asking the LLM to correct any mistakes.

In some approaches there is a notion of grid similarity, and this can be a useful signal for training.

Ultimately allowing repeated applications of some function over grids can enable types of computation that might not be possible in say a single pass through a transformer, or any fixed compute function. Many ARC problems become much easier if you repeatedly apply some function, and this is usually helpful when there is some linear dependence between computational steps.

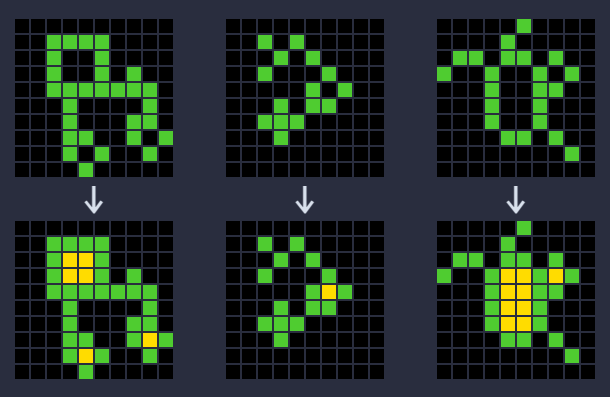

For an example of a problem that illustrates the importance of such iterative computation:

▼▼▼▼▼

This transformation function is much easier to write as a loop!

Thinking models, O3, inference time compute #

This wasn't covered in the technical report as it happened after in December, when OpenAI launched O3 with the announcement that it achieved an 87.5% score on ARC-AGI-1, on the semi-private eval set.

Just in June, the narrative was still that solving ARC-AGI would be extremely hard. This has totally flipped on its head in just a few months. Even those bullish about rumors of Q* and other reasoning approaches would not have expected this level of success.

This was an exceptional result, and even those labelled DL skeptics took a moment to acknowledge its importance. We have to take this seriously, and since O3, as well as DeepSeek, many people will be looking to leverage thinking models to approach ARC 2025.

Emulating and dramatically optimizing whatever OpenAI cracked for O3 would be a completely valid, and viable approach for anyone pursuing ARC in 2025, and it seems that several groups are already planning to do this, as Mike Knoop mentions at the end of his R1 analysis.

Letting LLMs think before answering is obviously a powerful approach:

It enables dynamic inference time computation . As mentioned above regarding refinement, this can be very useful for some puzzles.

. As mentioned above regarding refinement, this can be very useful for some puzzles. There is still some discussion about what is under the hood, but there is some form of inference time scaling supported from thinking models, where multiple reasoning traces can be followed in parallel.

supported from thinking models, where multiple reasoning traces can be followed in parallel. Thinking models are more akin to running programs, reasoning programs nonetheless. The ARC team refer to this as reasoning synthesis .

. They can attempt to verify their answers, backtrack when they go wrong, and follow logical steps. This is very useful for a domain such as ARC.

At a high level, thinking tokens can form some kind of not entirely discrete program code and this is in my opinion a big part of what makes them so powerful. I've written a little more about this idea here.

Due to the fact that ARC is a verifiable domain it should be possible to use RL to train a thinking model specifically to solve ARC puzzles. If done right this can cause models to learn how to think about ARC tasks in a programmatic way, rather than just memorizing all of the patterns of the test set. The hope is this will greatly improve generalization ability. It might even be the case that the in-context learning of these models is a sufficient form of test time adaptation.

Training these models is fiddly, and probably expensive. I've done a little bit of exploration into this here (although this work is already likely well out of date), and there are now a lot of people in the open-source community building tools and doing research on replicating DeepSeek's RL result, with some successes on simpler domains than ARC even with small models.

The immediate challenge for these approaches is context lengths. Puzzle context itself can be 6K tokens or more, and for a decently sized thinking trace, you may want another 10-30K tokens or more! This is hard to fit on limited compute resources, but people are working on optimizing this.

Whilst O3 performed well on ARC-AGI-1, the new version of the competition slashes O3-low performance from 76% to ~4% (although it still holds the top spot on the unrestricted-compute leaderboard).

I expect to see an ARC optimized thinking model be one of the top scoring approaches for this year's competition, but it will require significant